Temperature is a widely measured real-world physical variable, but achieving credible results is harder than it seems, even for a simple device such as a kitchen oven.

What is temperature, anyway?

While we all “know” intuitively what temperature is, actually defining it and calibrating its readings is complicated. A first-principles approach is to go back to basic physics and use sophisticated arrangements, often based on lasers and optical techniques, to measure temperature. Keep in mind that what we call temperature is really a measure of the average kinetic energy of the random motion of atoms and molecules.

With advanced instrumentation, it is possible to calibrate a temperature measurement to better than ±0.1°C, but this is not easy. There are many potential sources of error that must be accounted for and then compensated or adjusted for in the data reduction of the final readings. As a result, these techniques are usually left to major metrology standards organizations such as the National Institute of Standards and Technology (NIST) in the US.

Note that even the definition of temperature is complex. In 1954, the kelvin (K) was defined as the fraction 1/273.16 of the thermodynamic temperature of the triple point of water, the point at which liquid water, ice, and water vapor coexist in equilibrium (Figure 1). That is a valuable common reference because, for water of a precisely specified composition, the triple point always occurs at exactly the same temperature (273.16 K) and pressure.

However, a major worldwide, multi-decade metrology goal under the SI system (in which NIST participates) has been to define all major units, including those for length, time, mass, and temperature, in terms of physical constants that can be reproduced anywhere, rather than by comparison to a single master physical artifact. “Mass” was the last piece of the puzzle: the master kilogram kept in France is now obsolete, and the kilogram is instead defined through the Planck constant and realized with an electromechanical (Kibble) balance.

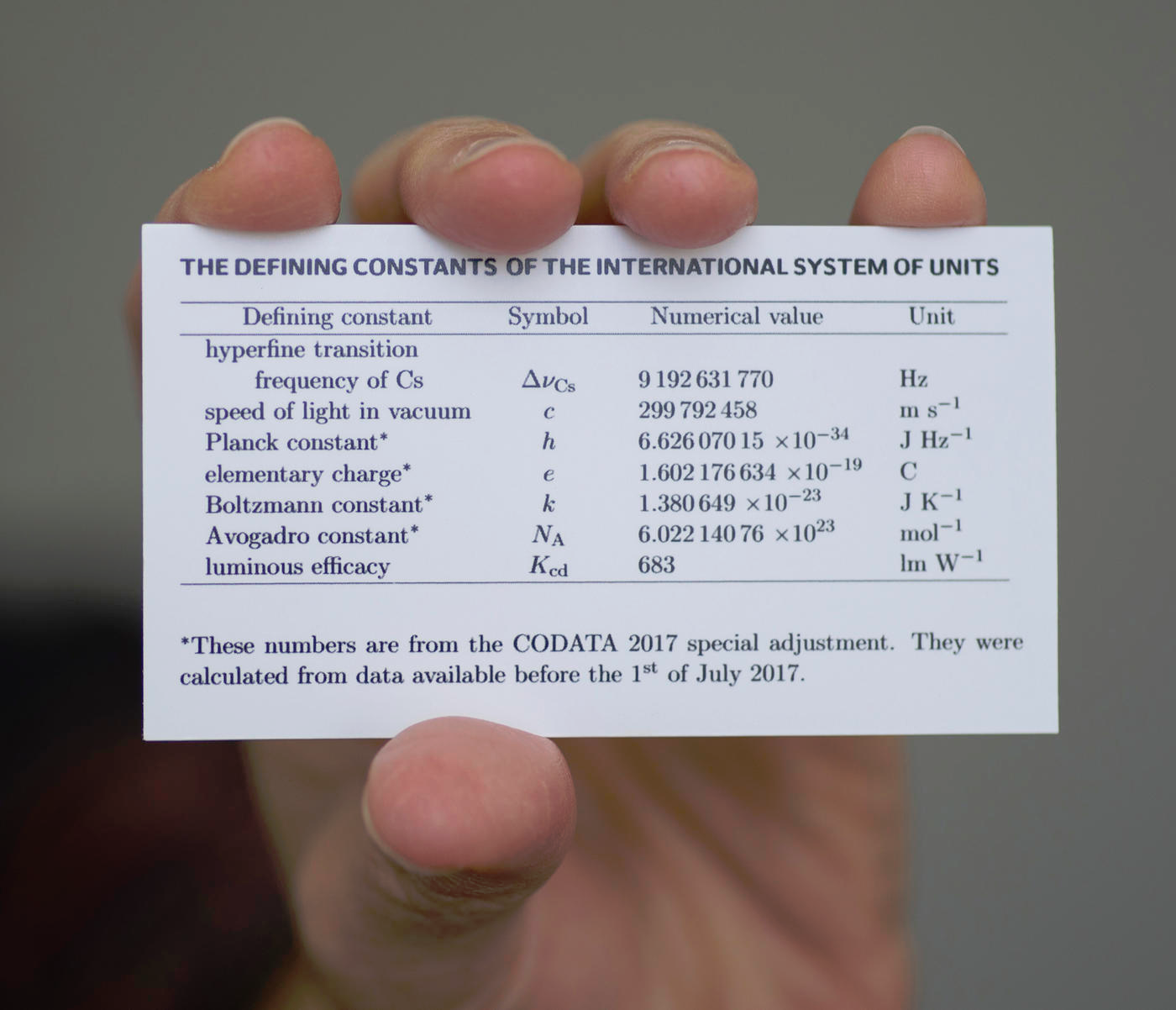

Now, the International System of Units (SI) is based on a foundation of seven defining constants: the cesium hyperfine splitting frequency, the speed of light in vacuum, the Planck constant, the elementary charge (i.e., the charge on a proton), the Boltzmann constant, the Avogadro constant, and the luminous efficacy of a specified monochromatic source (Figure 2). All SI units, including those for length and time, are defined in terms of these seven universal constants.

The kelvin has been redefined in terms of the Boltzmann constant, which relates the amount of thermodynamic energy in a substance to its temperature. When the revised SI was approved in November 2018, the new definition of the kelvin as the SI unit of thermodynamic temperature set its magnitude by fixing the numerical value of the Boltzmann constant to be equal to exactly 1.380649 × 10⁻²³ joules per kelvin.
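To give a feel for the scale that the Boltzmann constant sets, here is a minimal Python sketch that computes the characteristic thermal energy kT and the mean translational kinetic energy (3/2)kT of a particle at a given temperature. The function names are illustrative, and the (3/2)kT relation is the textbook ideal-gas result, an assumption brought in for the example rather than part of the SI definition.

```python
# Exact value fixed by the revised SI, in joules per kelvin.
BOLTZMANN_J_PER_K = 1.380649e-23

# Triple point of water as assigned by the 1954 definition, in kelvin.
TRIPLE_POINT_WATER_K = 273.16


def thermal_energy_joules(temperature_k: float) -> float:
    """Return the characteristic thermal energy k*T in joules."""
    if temperature_k < 0:
        raise ValueError("thermodynamic temperature cannot be negative")
    return BOLTZMANN_J_PER_K * temperature_k


def mean_kinetic_energy_joules(temperature_k: float) -> float:
    """Mean translational kinetic energy (3/2)*k*T of an ideal-gas particle."""
    return 1.5 * thermal_energy_joules(temperature_k)


if __name__ == "__main__":
    kt = thermal_energy_joules(TRIPLE_POINT_WATER_K)
    print(f"kT at the triple point of water: {kt:.4e} J")
```

The energies involved are on the order of 10⁻²¹ joules per particle at everyday temperatures, which hints at why primary thermometry demands such specialized instrumentation.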

Obviously, successful and accurate calibration is more difficult, if not impossible, if the sensor is installed in its final system and is difficult to remove or access. It’s even more challenging if the sensor is in actual use and there’s an issue with the credibility of the reading. In general, it is nearly impossible to calibrate a sensor while it is in an active system. For this reason, some mission-critical applications use two or even three sensors with some sort of voting scheme. This adds complexity and cost, and the additional circuitry can itself introduce new failure modes that undermine confidence.
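One common way to build such a voting scheme is to take the median of the redundant readings and flag any sensor that strays too far from it. The sketch below assumes at least three sensors (two can only reveal a disagreement, not resolve it); the `vote` function, the `VoteResult` type, and the tolerance value are hypothetical names chosen for illustration, not taken from any particular product.

```python
from statistics import median
from typing import List, NamedTuple, Sequence


class VoteResult(NamedTuple):
    value: float         # voted temperature, in the same units as the inputs
    suspects: List[int]  # indices of sensors that disagree with the vote


def vote(readings: Sequence[float], tolerance: float) -> VoteResult:
    """Median-vote over redundant sensors and flag the outliers."""
    if len(readings) < 3:
        raise ValueError("need at least three readings to outvote a bad sensor")
    voted = median(readings)
    # A sensor is suspect if it differs from the voted value by more than
    # the allowed tolerance.
    suspects = [i for i, r in enumerate(readings) if abs(r - voted) > tolerance]
    return VoteResult(voted, suspects)


if __name__ == "__main__":
    result = vote([180.2, 180.6, 195.0], tolerance=2.0)
    print(result.value, result.suspects)
```

With the sample readings, the third sensor is outvoted and flagged. Note that the voter cannot tell whether the flagged sensor has failed or has simply drifted out of calibration.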

The credibility of sensors and their readings is a major consideration in consumer products such as cars, which are increasingly “sensor platforms.” Their dozens of sensors measure temperature, pressure, fluid and gas flow, gas concentrations and constituents, and more. It is simply not practical to have multiple sensors for each of these measurements, nor would it necessarily increase confidence in the readings.

Instead, a different approach is used. Designers of these products step back and look at the bigger picture of what a related set of sensors is reporting and whether it all makes sense. Then, using a deep understanding of the system interactions, they can say that if, for example, the temperature of the exhaust gases rises outside of the acceptable range, then there should also be a corresponding change in some other sensed variable associated with that gas, such as its flow rate. Doing this requires advanced insight into system interactions, correlations, and associations so the supervisory system can decide if an individual sensor has failed or gone out of calibration or if there is a genuine performance issue. Getting to the point where the “check engine” light can be turned on can be a long and convoluted journey.
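A heavily simplified sketch of this kind of cross-check, using the exhaust-gas example, might look like the following. Everything here is hypothetical: the limits, the nominal flow, the units, and the `diagnose` function are placeholders for illustration, and a real supervisory system would correlate many more variables over time.

```python
from dataclasses import dataclass
from enum import Enum


class Diagnosis(Enum):
    NORMAL = "normal"
    SENSOR_SUSPECT = "temperature sensor suspect"
    GENUINE_FAULT = "genuine performance issue"


@dataclass(frozen=True)
class Limits:
    # All values are hypothetical placeholders, not real engine limits.
    temp_min_c: float = 200.0
    temp_max_c: float = 850.0
    flow_nominal_kg_h: float = 120.0
    flow_tolerance_kg_h: float = 15.0


def diagnose(exhaust_temp_c: float, flow_kg_h: float,
             limits: Limits = Limits()) -> Diagnosis:
    """Cross-check an exhaust temperature reading against the gas flow rate."""
    temp_ok = limits.temp_min_c <= exhaust_temp_c <= limits.temp_max_c
    if temp_ok:
        # This sketch only judges the temperature reading; a flow anomaly
        # on its own is not evaluated here.
        return Diagnosis.NORMAL
    flow_shifted = (abs(flow_kg_h - limits.flow_nominal_kg_h)
                    > limits.flow_tolerance_kg_h)
    # Out-of-range temperature with a corroborating flow change suggests a
    # real problem; without one, the sensor itself is the likelier culprit.
    return Diagnosis.GENUINE_FAULT if flow_shifted else Diagnosis.SENSOR_SUSPECT


if __name__ == "__main__":
    print(diagnose(900.0, 120.0).value)
    print(diagnose(900.0, 150.0).value)
```

The design choice is to treat an uncorroborated out-of-range reading as evidence against the sensor rather than against the system, which is the reasoning the paragraph above describes.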

The next part of this article will look at a common and supposedly simple example of checking the temperature of a standard home gas oven and why it is not simple at all.

