Gesture recognition is a user interface technology that allows computers to capture and interpret non-verbal communication, including facial expressions, head movements, body positioning, and hand motions, as commands. Hand gestures are an increasingly common mode of computer control, and the range of sensors used to recognize them is growing.

This FAQ briefly reviews how hand gesture controls are implemented today, looks at possible future applications for hand gestures, and closes with a survey of various types of non-video sensors used to recognize, interpret and respond to hand gestures, including e-field sensing, LIDAR, advanced capacitive technology, and haptics.

Originally, all gesture recognition relied on the interpretation of real-time video feeds. Basic video-based gesture recognition is still widely used. It’s a compute-intensive process and works as follows:

- A camera feeds image data, paired with data from a depth-sensing device (often an infrared sensor), into a computer to capture the dynamic gesture in three dimensions.

- Gesture recognition software compares the captured image data with a gesture library to find a match.

- The software then matches the recognized gesture with the corresponding command.

- Once the gesture has been recognized and interpreted, the computer either confirms the command desired by the user or simply executes the command correlated to that specific gesture.
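The matching and command-lookup steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product works: the feature vectors, the `GESTURE_LIBRARY` and `COMMANDS` tables, the `max_distance` threshold, and the function names are all hypothetical, and a real system would extract far richer features from the camera and depth data.

```python
import math

# Hypothetical gesture library: each gesture is reduced to a small feature
# vector (here, a normalized hand displacement in x, y, z).
GESTURE_LIBRARY = {
    "swipe_left": (-1.0, 0.0, 0.0),
    "swipe_right": (1.0, 0.0, 0.0),
    "push": (0.0, 0.0, -1.0),
}

# Hypothetical mapping from a recognized gesture to a command.
COMMANDS = {
    "swipe_left": "previous_track",
    "swipe_right": "next_track",
    "push": "accept_call",
}


def match_gesture(features, library=GESTURE_LIBRARY, max_distance=0.5):
    """Return the name of the closest library gesture, or None if no
    template lies within max_distance (Euclidean) of the features."""
    best_name, best_dist = None, float("inf")
    for name, template in library.items():
        dist = math.dist(features, template)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None


def gesture_to_command(features):
    """Match the captured features, then look up the corresponding command."""
    name = match_gesture(features)
    return COMMANDS.get(name) if name is not None else None
```

Calling `gesture_to_command((0.9, 0.1, 0.0))` selects the `swipe_right` template and returns its command, while a motion that is not close to any template returns `None`, which is where a system could ask the user to confirm or repeat the gesture.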

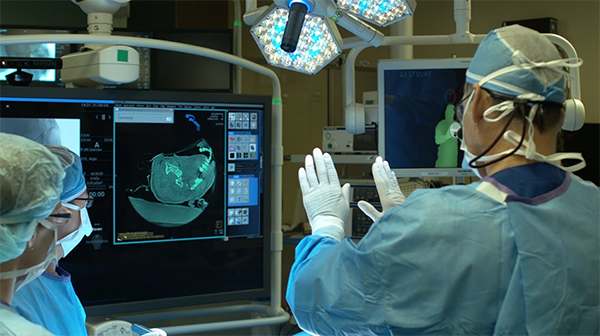

In complex environments, the basic video information can be augmented using skeletal and facial tracking, plus voice recognition and other inputs (Figure 1).

Automotive applications

Automotive interiors are a good example of emerging uses for video-based gesture recognition systems. Current automotive gesture recognition systems enable drivers and sometimes passengers to control the infotainment system or incoming phone calls without touching buttons or screens. In this environment, gesture recognition is expected to provide enhanced safety since drivers can use simple hand motions in place of complex menu interfaces, enabling them to focus more completely on driving the vehicle.

Voice-controlled systems also enable the driver to focus on the road, but they can be more complex to use. Most current voice control systems do not use natural language; they require exact phrases, and long menu chains can be involved to arrive at the specific command desired. Due to their simplicity for users, automotive applications for gesture recognition are expected to expand to other systems such as heating and cooling, control of interior lighting, telematics systems, and even connection with remote smart home systems. Some systems combine the best properties of voice recognition and gesture recognition.

In a camera-based automotive interior gesture recognition system, the camera is mounted to get an unobstructed view of the relevant interior space, usually from a high vantage point such as the ceiling. Current systems are focused only on the driver. In the future, as the number of cameras in the vehicle interior increases and the image quality improves, the scope of the monitored space is expected to expand to include passengers. The monitored area is illuminated with infrared LEDs or lasers to provide the best possible image quality even in low-light conditions. As described above, the gestures are analyzed in real time, and machine learning supports continuous improvement in accuracy. Some of the hand gestures recognized by BMW 7 Series cars are illustrated in Figure 2.

Figure 2: Examples of gestures programmed into BMW 7 Series cars. (Image: Aptiv)


E-field gesture recognition

Electric Field Proximity Sensing (EFPS) is based on the perturbation of an electric field by a nearby object that is at least slightly conductive. One embodiment of EFPS is a microelectronic device that can detect both moving and stationary objects, even through non-conductive solid materials. It operates by sensing small changes in a very low-power electromagnetic field generated by two antenna electrodes. It has a range that’s adjustable from a few centimeters to 4 meters, and its operation is independent of the impedance to ground.

EFPS and other e-field sensors provide small amounts of data. They are smaller, weigh less, and require less power than optical gesture recognition systems. In another embodiment, a gesture sensing IC uses electrodes to sense variations in an e-field and calculate the position of an object such as a finger, delivering position data in three dimensions and classifying the movement pattern into a gesture in real-time (Figure 3). By using e-field sensing, the system is completely insensitive to light, sound, and other ambient conditions that can interfere with the operation of other 3D gesture sensing technologies.

This specific 3D gesture sensing IC is optimized for battery-powered devices, and the sensing electrodes are driven by a low-voltage signal with a selectable frequency of 42, 43, 44, 45, or 100 kHz. Because e-fields can penetrate non-conductive materials, the sensors can be packaged in weather-proof enclosures or embedded in the interior walls of buildings. In addition to portable gesture sensing applications, EFPS systems are currently deployed in a variety of sensing applications, including:

- Robot manipulators that can determine properties of the object being grasped

- Automotive airbag systems to determine whether or not a seat is occupied

- Building automation systems to determine when a room is unoccupied
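The step of classifying a stream of 3D position data into a gesture, as described for the gesture sensing IC above, can be illustrated with a simple sketch. It assumes the sensor has already produced a time-ordered list of (x, y, z) positions in meters; the `classify_swipe` function, the gesture labels, the axis conventions, and the `min_travel` threshold are hypothetical and are not taken from any specific device.

```python
def classify_swipe(positions, min_travel=0.03):
    """Classify a time-ordered list of (x, y, z) positions, in meters,
    by the axis with the largest net displacement.

    Returns a gesture label, or None if the movement is shorter than
    min_travel or fewer than two samples are available.
    """
    if len(positions) < 2:
        return None

    # Net displacement between the first and last samples on each axis.
    start, end = positions[0], positions[-1]
    deltas = {
        "x": end[0] - start[0],
        "y": end[1] - start[1],
        "z": end[2] - start[2],
    }

    # Pick the dominant axis of movement.
    axis = max(deltas, key=lambda name: abs(deltas[name]))
    travel = deltas[axis]
    if abs(travel) < min_travel:
        return None

    # Assumed convention: z is the height above the electrode plane,
    # so decreasing z means the hand is approaching the sensor.
    labels = {
        "x": ("swipe_left", "swipe_right"),
        "y": ("swipe_down", "swipe_up"),
        "z": ("approach", "retreat"),
    }
    negative, positive = labels[axis]
    return positive if travel > 0 else negative
```

Using only the net displacement keeps the example short; a practical classifier would also consider speed, path shape, and noise in the position estimates.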

Using LIDAR

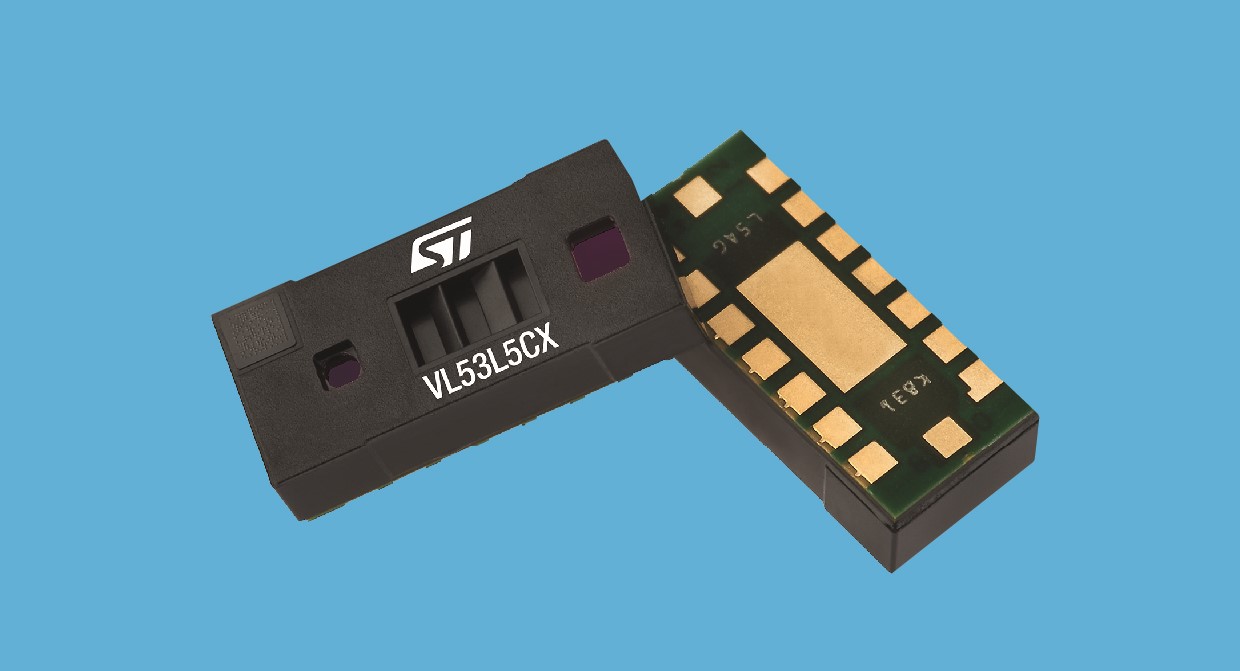

Light detection and ranging (LIDAR) is being used to bring a unique range of performance to gesture recognition in consumer and industrial systems. One example is a LIDAR device based on a 940 nm invisible-light vertical-cavity surface-emitting laser (VCSEL) with an integrated driver and a receiving array of single-photon avalanche diodes (SPADs). The system uses multizone ranging based on time-of-flight (ToF) measurements. It’s delivered as an integrated 6.4 mm x 3.0 mm x 1.5 mm module that includes the VCSEL emitter and a receiver with embedded SPADs and a histogram-based ToF processing engine (Figure 4).

The compact size and low power consumption of this LIDAR-based module are expected to enable the integration of touch-free gesture recognition in a range of applications, including AR/VR headsets, tablets, and mobile phone handsets; residential products such as kitchen appliances, thermostats, and other smart home controls; and equipment such as elevator controls, interactive signage and ticketing, and vending machines. This sensor can provide up to 60 frames per second in a 4×4 (16-zone) fast-ranging mode. In high-resolution mode, the sensor measures 64 zones (8×8).
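As a rough illustration of multizone ToF ranging, the sketch below converts a round-trip time into a distance using d = c·t/2 and then finds the closest zone in a frame of per-zone distances, which is one simple way to locate a hand in front of the sensor. The function names and the sample frame are hypothetical; an actual module reports distances through its own driver interface and performs the ToF processing internally.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_to_distance_m(round_trip_s):
    """Convert a round-trip time of flight (seconds) to a one-way
    distance (meters). The light travels out and back, hence the /2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def nearest_zone(frame_mm):
    """Given a frame as a list of rows of per-zone distances (mm),
    return (row, column, distance) of the closest zone."""
    best = None
    for r, row in enumerate(frame_mm):
        for c, distance in enumerate(row):
            if best is None or distance < best[2]:
                best = (r, c, distance)
    return best


# Hypothetical 4x4 frame: a hand about 180 mm away in the upper-right
# zones, with the background roughly 900 mm away.
example_frame = [
    [900, 900, 200, 180],
    [900, 900, 210, 190],
    [900, 900, 900, 900],
    [900, 900, 900, 900],
]
```

Tracking how the nearest zone moves from frame to frame gives a coarse hand trajectory that a gesture classifier can then interpret.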

Shrinking capacitive gesture recognition

Capacitive three-dimensional gesture sensors based on miniature carbon-nanotube paper composite capacitive sensors have been developed for integration into gaming devices and other consumer electronics. Compared with previous-generation capacitive gesture sensors, the carbon-nanotube paper-based device is 10x faster and 100x smaller and operates at greater ranges of up to 20 cm (Figure 5). These sensors recognize 3D gestures without any hand appliance or other device and are faster and more accurate than infrared sensors. In addition, they are insensitive to factors such as skin tone and lighting conditions.

Figure 5: This carbon-nanotube paper-based gesture recognition device is 10x faster and 100x smaller than previous generations of capacitive sensors. (Image: Somalytics)


Cameras + ultrasound haptics

A new system designed for VR/AR headsets combines IR camera-based gesture recognition with haptic feedback. The system uses IR LEDs to illuminate the user’s hand, and the LEDs are pulsed in synchronization with the camera framerate. The camera sends the current location information to the processor with each pulse. The gesture recognition software in the processor models the bones and joints as well as the hand movement. That enables the system to accurately know the position of a thumb or finger, even if it’s not in an open line of sight. The system can be programmed to recognize a wide range of gestures, including grabbing, swiping, pinching, pushing, and more. This gesture recognition system has an interactive zone that ranges from 10 cm to 1 meter with a typical field of view of 170° x 170°.

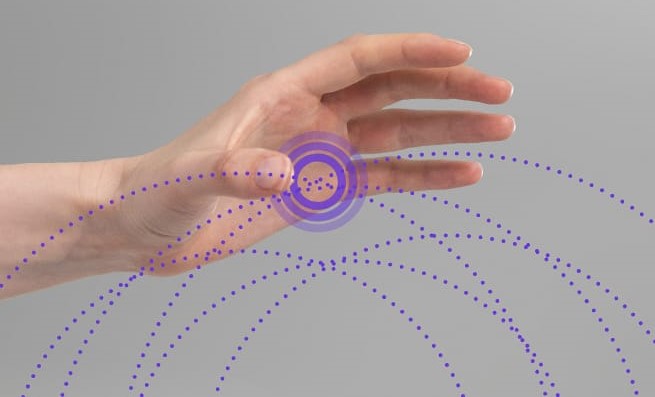

In addition to recognizing gestures, the system knows where a person’s hand is and can use that information to control haptic feedback based on ultrasonics. The ultrasound haptics system is based on a matrix of speakers triggered with specific time differences that enable the sound waves to be focused at a specific point in space, for example, where a particular part of a person’s hand is positioned (Figure 6). The 3D focal point can be changed in real-time as the application requires. The combined vibrations of the ultrasound waves at the focal point create a pressure point that can be felt by human skin.
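The focusing principle can be sketched as a delay calculation: each transducer is triggered so that its wave arrives at the focal point at the same instant as the wave from the farthest transducer. The example below is a simplified model under stated assumptions (a constant speed of sound of 343 m/s in air, ideal point sources, and a hypothetical `focus_delays` function); it is not taken from any vendor's implementation.

```python
import math

# Assumed speed of sound in air at roughly room temperature.
SPEED_OF_SOUND_M_PER_S = 343.0


def focus_delays(transducers, focal_point, speed=SPEED_OF_SOUND_M_PER_S):
    """Return one trigger delay (seconds) per transducer so that all
    waves arrive at focal_point simultaneously.

    transducers: list of (x, y, z) positions in meters.
    focal_point: (x, y, z) target position in meters.
    """
    distances = [math.dist(position, focal_point) for position in transducers]
    farthest = max(distances)
    # The farthest transducer fires first (zero delay); closer ones wait
    # for the extra time the farthest wave needs to cover the difference.
    return [(farthest - d) / speed for d in distances]


# Three transducers in a row, 10 mm apart, focusing 200 mm above the center.
example_array = [(-0.01, 0.0, 0.0), (0.0, 0.0, 0.0), (0.01, 0.0, 0.0)]
example_delays = focus_delays(example_array, (0.0, 0.0, 0.2))
```

In this example the center transducer is closest to the focal point, so it receives the longest delay. Recomputing the delays for a new focal point is what allows the pressure point to follow the tracked hand position.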

Summary

Video-based gesture recognition is still the most widely used form of gesture recognition. It is used in various applications, including medical environments and automotive cabins. More recently, gesture recognition has been applied to AR/VR systems, building automation systems, and robotics. The growing applications for gesture recognition are being enabled by new gesture recognition technologies, including e-field sensing, VCSEL-based LIDAR systems, carbon-nanotube capacitive devices, and IR cameras combined with ultrasound haptic feedback.

References

E-field basics, Microchip

Touch is going virtual, Ultraleap

What is gesture recognition?, Aptiv

What is gesture recognition? Gesture recognition defined, 3D Cloud by Marxent