A wide range of sensor technologies is required to support augmented reality and virtual reality (AR/VR) systems. Today’s AR/VR implementations focus mostly on visual and audio interfaces and rely on motion tracking and listening/voice recognition sensors. That is beginning to change as new types of sensors and various types of haptics are introduced. This FAQ begins by reviewing several of the basic position sensing technologies used in current AR/VR systems, presents a proposed AR/VR system for neurorehabilitation and quality of life improvement, reviews how thermal sensing technologies are being developed to provide more complete AR/VR environments, and closes by looking at emerging haptics technologies for thermal- and touch-based feedback.

AR involves the creation of an environment that integrates the existing surroundings with virtual elements. In a virtual reality environment, the system creates the complete ‘reality’ and only needs to know about the person’s relative movements and orientation. A basic VR system uses an inertial measurement unit (IMU) that can include an accelerometer, a gyroscope, and a magnetometer. An AR system needs to know where the person is, but it also needs to understand what the person is seeing, what the person is hearing, how the environment is changing, etc. As a result, AR uses more complex sensing, beginning with an IMU and adding time-of-flight sensors, heat mapping, structured light sensors, etc.

Both AR and VR are immersive environments, and for VR in particular, complete immersion is necessary for a good user experience. Motion sickness is a challenge when developing AR/VR systems: to avoid it, AR/VR devices must capture user movements quickly and accurately, so the IMUs and other motion sensors must be highly stable and have very low latency. IMUs used in AR/VR systems combine an accelerometer, a gyroscope, and a magnetometer to facilitate error correction and quickly produce accurate results, enabling the system to track head movement and position.
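
One common way to combine IMU sensors for error correction is a complementary filter: the gyroscope's integrated rate is smooth and fast but drifts, while the accelerometer's gravity reading is noisy but drift-free. The sketch below estimates pitch only. The function names and the blend weight `alpha = 0.98` are illustrative assumptions, and real AR/VR headsets may use more elaborate fusion that also incorporates the magnetometer.

```python
import math


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the gravity vector the accelerometer measures."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> float:
    """Fuse gyro rate and accelerometer tilt into one pitch estimate (radians).

    samples: iterable of (gyro_pitch_rate_rad_s, ax, ay, az) tuples.
    alpha:   weight on the integrated gyro angle (illustrative value).
    """
    pitch = None
    for gyro_rate, ax, ay, az in samples:
        acc_angle = accel_pitch(ax, ay, az)
        if pitch is None:
            pitch = acc_angle  # start from the gravity reference
            continue
        # Integrating the gyro is fast and smooth but accumulates drift;
        # the small accelerometer term keeps pulling the estimate back.
        pitch = alpha * (pitch + gyro_rate * dt) + (1 - alpha) * acc_angle
    return pitch


# Stationary, level device whose gyro has a 0.01 rad/s bias, sampled at 100 Hz.
samples = [(0.01, 0.0, 0.0, 9.81)] * 1000
print(complementary_filter(samples, dt=0.01))  # settles near 0.0049 rad
```

With a constant gyro bias, plain integration would drift without bound (about 0.1 rad after these 10 seconds), whereas the filter settles at a small, bounded offset set by `alpha`.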

In addition, both AR and VR systems can benefit from various forms of user feedback and interaction, including gesture and voice recognition. Gesture recognition can be based on real-time video analysis (which can be energy- and compute-intensive) or on more advanced technologies such as e-field sensing, LiDAR, and advanced capacitive technologies. For a discussion of gesture recognition, see the FAQ “How can a machine recognize hand gestures?”

A key requirement for AR environments is to accurately, quickly, and continuously present computer-generated images of the actual environment. Most AR headsets rely on one or more special types of imaging sensors, including time-of-flight (ToF) cameras, vertical-cavity surface-emitting laser (VCSEL)-based light detection and ranging (LiDAR), binocular depth sensing, or structured-light sensors. Some use a combination of these sensors.

ToF cameras combine a modulated IR light source with a charge-coupled device (CCD) image sensor. They measure the phase delay (time of flight) of the reflected light to calculate the distance between the illuminated object and the camera. Thousands or millions of these measurements combine into a ‘point cloud’ database that represents a three-dimensional image of the surrounding area. A more recently developed technology, VCSEL-based LiDAR, can produce higher-fidelity point clouds. In addition, VCSEL technology is used in smart glasses and other wearable devices to produce more compact, lower-power displays and gesture recognition systems.
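
For a continuous-wave ToF sensor, the round trip to the object and back gives a phase delay of φ = 4π·f_mod·d/c, so d = c·φ/(4π·f_mod). The sketch below applies that relation and then back-projects each pixel into a point-cloud entry using a pinhole camera model. The modulation frequency, the intrinsics `fx`, `fy`, `cx`, `cy`, and the function names are assumptions for illustration; real sensors typically add calibration and filtering steps not shown here.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def phase_to_distance(phase_rad: float, f_mod_hz: float) -> float:
    """Distance (m) from the phase delay of continuous-wave modulated light.

    The light travels out and back (2 * d), so
    phase = 2 * pi * f_mod * (2 * d / c)  ->  d = c * phase / (4 * pi * f_mod).
    """
    return C * phase_rad / (4 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Distance at which the phase reaches 2 * pi and readings start to wrap."""
    return C / (2 * f_mod_hz)


def pixel_to_point(u, v, distance, fx, fy, cx, cy):
    """Back-project one pixel's measured radial distance to an (x, y, z) point.

    fx, fy, cx, cy are pinhole-camera intrinsics, assumed known from calibration.
    """
    x, y = (u - cx) / fx, (v - cy) / fy  # ray direction with z = 1
    norm = math.sqrt(x * x + y * y + 1.0)
    return (distance * x / norm, distance * y / norm, distance / norm)


def depth_image_to_point_cloud(distances, fx, fy, cx, cy):
    """distances: 2-D list indexed [row][column] of radial distances in metres."""
    return [pixel_to_point(u, v, d, fx, fy, cx, cy)
            for v, row in enumerate(distances)
            for u, d in enumerate(row)]


print(phase_to_distance(math.pi / 2, 20e6))  # about 1.87 m at 20 MHz modulation
print(unambiguous_range(20e6))               # about 7.49 m
```

Because the phase wraps every 2π, a single modulation frequency limits the unambiguous range to c/(2·f_mod), about 7.5 m at 20 MHz.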

Structured light sensors project a defined pattern of light (IR or visible) onto the surroundings and analyze the distortion of the returned pattern mathematically to triangulate the distance to various points. The camera pixel data (a type of primitive point cloud) is analyzed to calculate the difference between the projected and returned patterns, taking into account camera optics and other factors, to determine the distances to objects and surfaces in the surroundings. While a single structured light sensor can be used, more accurate results are realized when two structured light sensors are used and their outputs are combined.
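
In the simplest case, a projector and camera separated by a known baseline with rectified (parallel) optics, triangulation reduces to depth = focal length × baseline / disparity, where disparity is the pixel shift between where a pattern feature was projected and where the camera observes it. This is a minimal sketch under that assumption; the function names and sign convention are illustrative, and the camera-optics corrections mentioned above are omitted.

```python
def triangulate_depth(projected_col: float, observed_col: float,
                      focal_px: float, baseline_m: float) -> float:
    """Depth (m) of one pattern feature for a rectified projector-camera pair.

    Assumed sign convention: the observed feature shifts toward smaller
    column indices, so disparity = projected_col - observed_col > 0.
    """
    disparity = projected_col - observed_col
    if disparity <= 0:
        raise ValueError("disparity must be positive (feature mismatch or point at infinity)")
    return focal_px * baseline_m / disparity


def depth_step_per_pixel(depth_m: float, focal_px: float, baseline_m: float) -> float:
    """Approximate depth change caused by a one-pixel disparity error: Z^2 / (f * b)."""
    return depth_m ** 2 / (focal_px * baseline_m)


# Hypothetical setup: 600-pixel focal length, 5 cm baseline, 20-pixel disparity.
z = triangulate_depth(420.0, 400.0, focal_px=600.0, baseline_m=0.05)
print(z)                                     # about 1.5 m
print(depth_step_per_pixel(z, 600.0, 0.05))  # about 0.075 m per pixel
```

The second function shows why accuracy degrades with range: since Z = f·b/d, a one-pixel disparity error changes depth by roughly Z²/(f·b), which grows with the square of distance.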

Additional sensors found in AR systems include directional microphones (which may be displaced in the future by bone-conduction directional audio transducers); various biosensors, such as thermal sensors; ambient light sensors; and forward- and rear-facing video cameras. In addition, a wireless link is needed to download all of the sensor data and upload the video information for the real-time creation of an immersive and dynamic environment.

Neurorehabilitation

AR/VR technologies are being developed for a range of medical applications. For example, a system for immersive neurorehabilitation exercises using virtual reality (INREX-VR) is being developed. The INREX-VR system captures user movements in real time, evaluates joint mobility for both upper and lower limbs, records training sessions, and saves electromyography data. A virtual therapist demonstrates the exercises, and the sessions can be configured and monitored using telemedicine connectivity.
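
As an illustration of how joint mobility could be quantified from tracked movements, the sketch below computes the angle at a joint (for example, the elbow) from three tracked 3-D positions and reports the range of motion over a session. The source does not describe how INREX-VR computes mobility, so the function names and the shoulder/elbow/wrist inputs are hypothetical.

```python
import math
from typing import Iterable, Sequence, Tuple

Point3 = Sequence[float]


def joint_angle_deg(proximal: Point3, joint: Point3, distal: Point3) -> float:
    """Angle (degrees) at `joint` between the segments to `proximal` and `distal`."""
    a = [p - j for p, j in zip(proximal, joint)]
    b = [d - j for d, j in zip(distal, joint)]
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(x * x for x in b))
    cos_theta = max(-1.0, min(1.0, dot / norms))  # clamp floating-point overshoot
    return math.degrees(math.acos(cos_theta))


def range_of_motion(frames: Iterable[Tuple[Point3, Point3, Point3]]) -> float:
    """Max minus min joint angle over a session of (shoulder, elbow, wrist) frames."""
    angles = [joint_angle_deg(s, e, w) for s, e, w in frames]
    return max(angles) - min(angles)


# Two hypothetical tracker frames: arm straight, then elbow bent at a right angle.
frames = [
    ((0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.6, 0.0, 0.0)),
    ((0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.3, 0.25, 0.0)),
]
print(range_of_motion(frames))  # about 90 degrees
```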

The INREX-VR software was developed to use off-the-shelf VR hardware and is suited for use by patients with neurological disorders, by therapists responsible for supervising their rehabilitation, and by trainees. The system can evaluate the user’s emotional condition through heart-rate monitoring and stress level through skin conductance. Facial recognition can also be implemented when the system is used with the VR headset.

Heating up future AR/VR

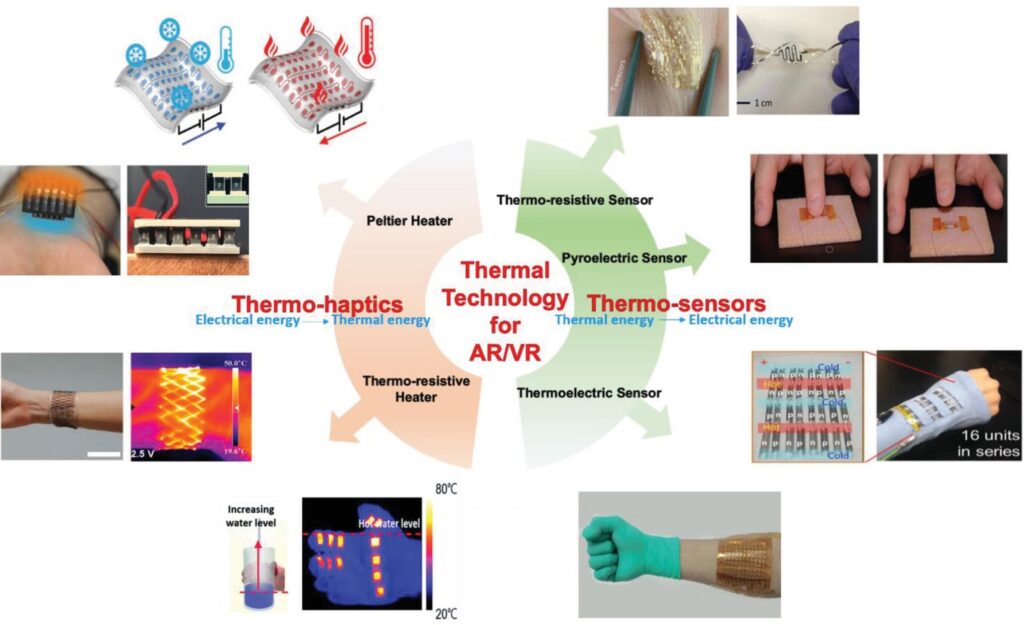

Devices that can add a thermal dimension to AR/VR environments are a ‘hot topic.’ Various thermal sensing and thermal haptics technologies are being pursued (Figure 3). Thermal sensing technologies proposed for AR/VR systems include thermoresistive, pyroelectric, thermoelectric, and thermogalvanic devices. These sensors convert thermal changes into electrical signals; for the types that generate currents or voltages, the power levels are too low to support energy harvesting but are sufficient to enable thermal sensing. In particular, polymer composites and conducting polymers are expected to find use in future thermal sensors. The mechanical flexibility of polymers makes them especially suitable for wearable thermal sensors. Some of the proposed polymer-based thermal sensors can function in both contact and non-contact modes, further increasing their versatility. Performance stability and reaction delays resulting from thermal hysteresis are two challenges that must be addressed before these thermal sensors are ready for use in AR/VR systems.
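
Reading a thermoresistive sensor comes down to inverting its resistance-temperature relation. The sketch assumes a linear model R = R0·(1 + α·(T - T0)), which is only an approximation for many materials (α is negative for NTC materials), and models the reaction delay as a simple first-order lag with time constant τ. Hysteresis itself (a reading that depends on whether temperature is rising or falling) is not modeled. All names and parameter values are assumptions.

```python
import math


def resistance_to_temperature(r_ohm: float, r0_ohm: float,
                              alpha_per_c: float, t0_c: float = 25.0) -> float:
    """Invert the linear model R = R0 * (1 + alpha * (T - T0)).

    alpha_per_c is the temperature coefficient of resistance; it is positive
    for PTC materials and negative for NTC materials.
    """
    return t0_c + (r_ohm / r0_ohm - 1.0) / alpha_per_c


def first_order_reading(true_c: float, start_c: float, tau_s: float, t_s: float) -> float:
    """Sensor reading t_s seconds after a step change, modelled as a first-order lag."""
    return true_c + (start_c - true_c) * math.exp(-t_s / tau_s)


def time_to_fraction(tau_s: float, fraction: float) -> float:
    """Time for a first-order sensor to cover `fraction` of a step change."""
    return -tau_s * math.log(1.0 - fraction)


# Hypothetical sensor: 1 kOhm at 25 C, alpha = +0.004 per C, time constant 2 s.
print(resistance_to_temperature(1100.0, 1000.0, 0.004))  # about 50 C
print(time_to_fraction(2.0, 0.95))                        # about 6 s
```

A sensor with a 2 s time constant needs roughly three time constants to report 95% of a step change, which illustrates why reaction delay matters for interactive AR/VR use.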

Thermal haptics, or thermal stimulation, is another emerging area of AR/VR that will provide an enhanced level of immersion in AR/VR environments. Thermal haptics are expected to rely on thermoresistive heaters or Peltier devices, which control the temperature of a target area but are more power-hungry than polymer-based thermal sensors. On the plus side, active thermal haptics may have faster response times than the proposed polymer-based thermal sensors. With thermal haptics, users will be able to feel the temperature of a virtual object and interact more realistically with their environment.

Thermoresistive heaters may have lower hysteresis than Peltier devices, but they only provide heating, while Peltier devices can provide both heating and cooling. A key factor in developing thermal haptics will be the need for user controls that enable the selection of a range of temperatures that provide stimulation without causing discomfort or burns. New materials will be needed to make wearable Peltier devices both flexible and lightweight. Materials development is a key activity to enable both thermal sensing and thermal haptics in future AR/VR systems.
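
The user-selectable temperature range and the heating-only versus heating-and-cooling distinction can be captured in a few lines of control logic. The sketch below is a hypothetical proportional controller; the `ThermalActuator` class, the gain, and the 15 to 40 °C limits are illustrative placeholders, not validated comfort or safety values.

```python
from dataclasses import dataclass

# Illustrative user-selectable comfort limits (placeholders, NOT safety-validated).
MIN_SAFE_C = 15.0
MAX_SAFE_C = 40.0


@dataclass
class ThermalActuator:
    can_cool: bool     # True for a Peltier device, False for a resistive heater
    gain: float = 0.2  # proportional gain: drive fraction per degree C (assumed)

    def drive(self, target_c: float, measured_c: float) -> float:
        """Return a drive command in [-1, 1]; negative values request cooling."""
        # Keep the requested temperature inside the user-selected range.
        target_c = min(max(target_c, MIN_SAFE_C), MAX_SAFE_C)
        command = self.gain * (target_c - measured_c)
        lowest = -1.0 if self.can_cool else 0.0  # a resistive heater cannot cool
        return min(max(command, lowest), 1.0)


heater = ThermalActuator(can_cool=False)
peltier = ThermalActuator(can_cool=True)
# A virtual ice cube (target 5 C) touched while the skin-side plate reads 30 C:
print(heater.drive(5.0, 30.0))   # 0.0  (heater can only switch off)
print(peltier.drive(5.0, 30.0))  # -1.0 (full cooling, target clamped to 15 C)
```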

Ultrasound haptics

Ultrasound haptics rely on precise control of an array of speakers that send out precisely timed ultrasound pulses. The timing differences are designed so that the ultrasound waves from the various speakers arrive at the same place simultaneously, creating a pressure point that human skin can feel (Figure 4). The focal point where the ultrasound waves meet can be controlled in real time and changed from moment to moment based on hand position or other factors.
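
The timing rule described above can be computed directly: each transducer's delay equals the difference between its travel time to the focal point and that of the farthest transducer, so every wavefront arrives together. The sketch assumes point-like transducers, a uniform speed of sound of 343 m/s (air at about 20 °C), and hypothetical function names.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # air at about 20 C (assumed)


def focus_delays(transducers, focal_point, c=SPEED_OF_SOUND_M_S):
    """Firing delay (s) for each transducer so all wavefronts meet at focal_point.

    The farthest transducer fires first (delay 0); nearer ones wait by the
    difference in travel time, so every wave arrives at the same instant.
    """
    distances = [math.dist(t, focal_point) for t in transducers]
    farthest = max(distances)
    return [(farthest - d) / c for d in distances]


# Hypothetical 1-D array: three transducers 1 cm apart, focus 20 cm above the centre.
array = [(-0.01, 0.0, 0.0), (0.0, 0.0, 0.0), (0.01, 0.0, 0.0)]
focus = (0.0, 0.0, 0.20)
delays = focus_delays(array, focus)
print([round(d * 1e6, 3) for d in delays])  # microseconds; centre element waits longest
```

Moving the focal point only requires recomputing the delays for the new position, which is why it can be changed from moment to moment.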

A tracking camera serves the dual purpose of recognizing gestures and providing the exact position of a person’s hand, enabling the focal point to be positioned at a specific spot on the hand. By controlling the motion of the focal point, it’s possible to create multiple tactile effects. Haptic feedback is expected to find uses beyond AR/VR gaming, for example, in system controls and interactions for shopping kiosks and vending machines, automotive interiors, building automation, and other areas.
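
Moving the focal point is what creates different tactile effects. A minimal sketch, assuming the tracking camera supplies the palm centre: generate focal-point positions that trace a small circle around it at a fixed update rate. The function name, radius, revolution rate, and update rate are hypothetical, and each point would still need to be converted into per-transducer timing.

```python
import math


def circle_trajectory(palm_center, radius_m, rev_per_s, update_hz, duration_s):
    """Focal-point positions tracing a circle around the tracked palm centre.

    The circle lies in a plane parallel to the array (constant z); each
    returned point would be handed to the array's focusing logic in turn.
    """
    cx, cy, cz = palm_center
    n = int(round(duration_s * update_hz))
    points = []
    for k in range(n):
        angle = 2 * math.pi * rev_per_s * (k / update_hz)
        points.append((cx + radius_m * math.cos(angle),
                       cy + radius_m * math.sin(angle),
                       cz))
    return points


# Hypothetical effect: 1 cm circle, 50 revolutions/s, 10 kHz update rate, 0.1 s.
path = circle_trajectory((0.0, 0.0, 0.20), 0.01, 50.0, 10_000.0, 0.1)
print(len(path), path[0])  # 1000 points, starting at (0.01, 0.0, 0.2)
```

In a live system, the palm centre would be refreshed from the tracking camera on every frame so the effect follows the hand.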

Summary

Sensors are a key technology for enabling compelling and immersive AR and VR environments. Because AR environments must stitch together the surrounding reality with virtual elements, AR sensor systems are more complex than those used for VR. New sensor modalities, including thermal sensors, and new thermal and touch haptics are being developed to increase the realism of AR/VR systems.

References

Consumer AR Could Have Saved Lives, Economy, Association for Computing Machinery

Emerging Thermal Technology Enabled Augmented Reality, Advanced Functional Materials

Flexible Virtual Reality System for Neurorehabilitation and Quality of Life Improvement, MDPI Sensors

Sensors for AR/VR, Pressbooks

Touch is going virtual, Ultraleap