Today, advanced sensing applications in smartphones and automobiles frequently use sensor fusion, and advanced industrial applications such as sophisticated robots also take advantage of this technology. Sensor fusion is a subcategory of data fusion and is also called multisensor data fusion or sensor-data fusion.

For location sensing in smartphones, the data from accelerometers, gyroscopes, and magnetometers is combined to provide a better result than any of these sensors can achieve alone. In cars, the data from radar, LiDAR, and cameras, as well as maps and other data sources, is combined to make decisions in autonomous driving situations. In robots, the data from vision systems and inertial measurement units (IMUs) is combined to improve the movement of robotic arms and increase the sampling rate.
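To make the "better than any single sensor" idea concrete, here is a minimal sketch of one textbook fusion rule, inverse-variance weighting. It is not the method used by any particular phone, car, or robot. It assumes each sensor gives an independent, unbiased reading of the same quantity with a known noise variance. The function name `fuse_inverse_variance` and the sample numbers are hypothetical and chosen only for illustration.

```python
def fuse_inverse_variance(readings):
    """Fuse independent (value, variance) readings of the same quantity.

    Assumption: readings are unbiased, mutually independent, and each
    variance is known and positive. Real systems rarely satisfy all three.
    """
    if not readings:
        raise ValueError("at least one reading is required")
    if any(var <= 0 for _, var in readings):
        raise ValueError("variances must be positive")

    # A more precise sensor (smaller variance) gets a larger weight.
    weights = [1.0 / var for _, var in readings]
    total_weight = sum(weights)

    fused_value = sum(w * value for w, (value, _) in zip(weights, readings)) / total_weight
    # Under the stated assumptions, the fused variance is never larger
    # than the variance of the best individual sensor.
    fused_variance = 1.0 / total_weight
    return fused_value, fused_variance


# Hypothetical readings of one distance (value, variance), not real sensor data.
readings = [(10.2, 4.0), (9.8, 1.0), (10.5, 2.0)]
fused_value, fused_variance = fuse_inverse_variance(readings)
print(f"fused value = {fused_value:.3f}, fused variance = {fused_variance:.3f}")
```

With these made-up inputs, the fused variance comes out below 1.0, the variance of the best single reading. That holds only under the independence and known-variance assumptions above. Practical systems usually track changing state over time with filters such as the Kalman filter instead of a one-shot average.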

The military has been a pioneer in sensor-data fusion to “obtain a lower detection error probability and a higher reliability by using data from multiple distributed sources.”

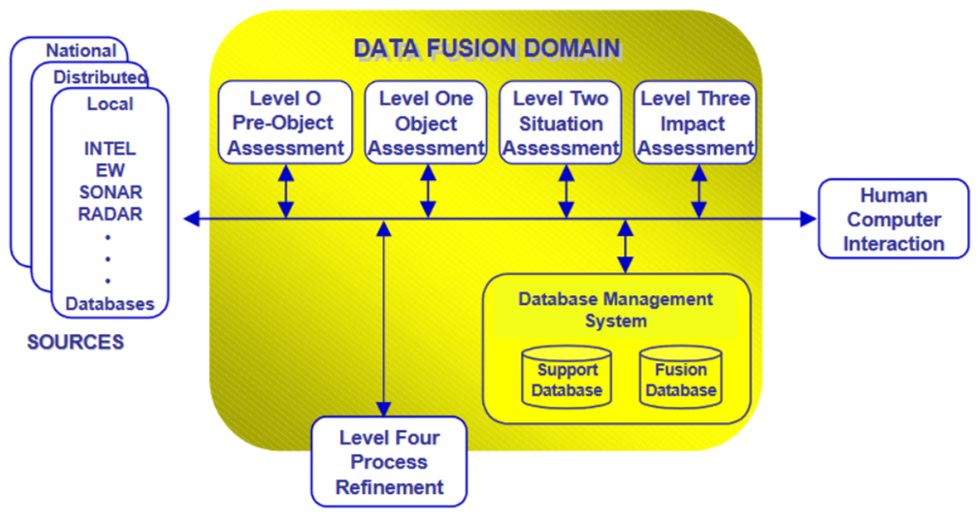

In 1987, the U.S. Department of Defense (DOD) Joint Directors of Laboratories (JDL) Data Fusion Subgroup developed a definition of data fusion, which was further refined as a “multilevel, multifaceted process dealing with the automatic detection, association, correlation, estimation, and combination of data and information from single and multiple sources.” A graphic indicating various calculations and data sources is shown in Figure 1. Figure 2 shows the technology requirements of each of the items identified in Figure 1.