As robots become increasingly autonomous, sensor fusion is growing in importance. Sensor fusion merges data from multiple sensors on and off the robot to reduce uncertainty as the robot navigates or performs specific tasks. It brings autonomous robots several benefits: greater accuracy, reliability, and fault tolerance of sensor inputs; extended spatial and temporal coverage from sensor systems; improved resolution and better recognition of the surroundings, especially in dynamic environments; and lower cost and complexity, because fusion algorithms handle data preprocessing and allow different kinds of sensors to be used without changing the robot's core application software or hardware.
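To make "reduce uncertainty" concrete, here is a minimal sketch, assuming two independent sensors with Gaussian noise of known variance that measure the same quantity. Inverse-variance weighting (the scalar special case of a Kalman filter measurement update) produces a fused estimate whose variance is lower than that of either input. The function name `fuse`, the sensor labels, and the numbers are hypothetical.

```python
def fuse(estimates):
    """Fuse independent scalar estimates of the same quantity.

    estimates: list of (value, variance) pairs, one per sensor.
    Returns (fused_value, fused_variance) using inverse-variance weighting.
    """
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical readings of the same distance (m) from two sensors.
lidar = (2.00, 0.01)  # (value, variance)
sonar = (2.10, 0.04)
fused_value, fused_var = fuse([lidar, sonar])
print(round(fused_value, 3), round(fused_var, 4))  # 2.02 0.008
```

The fused value leans toward the less noisy sensor, and the fused variance (0.008) is smaller than the better sensor's variance (0.01).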

Sensor fusion is used by autonomous mobile robots, stationary robots, aerial robots, and marine robots. A simple example is fusing a wheel encoder with an inertial measurement unit (IMU) to help determine a robot's position and orientation. Mobile robots that use this approach often operate in dynamic warehouse and factory environments. Fusing in information from external cameras placed at strategic locations around the facility can further improve a robot's ability to navigate around fixed and moving obstacles.
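A minimal sketch of encoder and IMU fusion for a differential-drive robot, assuming the encoders report how far each wheel traveled since the last step and the IMU gyroscope reports a yaw rate. The translation comes from the encoders, and the heading change is a fixed-weight blend of the gyro and encoder estimates. The function `fuse_step`, the weight `alpha`, and all numbers are hypothetical illustrations; production systems more commonly run a Kalman-type filter.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0      # m
    y: float = 0.0      # m
    theta: float = 0.0  # heading, rad


def fuse_step(pose, d_left, d_right, gyro_rate, dt, wheel_base, alpha=0.98):
    """One dead-reckoning step for a differential-drive robot.

    d_left, d_right: wheel travel since the last step (m), from the encoders.
    gyro_rate: yaw rate from the IMU gyroscope (rad/s).
    wheel_base: distance between the two wheels (m).
    alpha: hypothetical weight on the gyro's heading change; the remainder
    comes from the encoders.
    """
    d_center = (d_left + d_right) / 2.0          # distance traveled by the center
    dtheta_enc = (d_right - d_left) / wheel_base  # heading change from encoders
    dtheta_gyro = gyro_rate * dt                  # heading change from the gyro
    dtheta = alpha * dtheta_gyro + (1.0 - alpha) * dtheta_enc
    heading = pose.theta + dtheta / 2.0           # midpoint heading for translation
    new_theta = pose.theta + dtheta
    return Pose(
        x=pose.x + d_center * math.cos(heading),
        y=pose.y + d_center * math.sin(heading),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),  # wrap to [-pi, pi]
    )


# Hypothetical run: both wheels report 0.1 m per step, gyro reports no rotation.
pose = Pose()
for _ in range(10):
    pose = fuse_step(pose, d_left=0.1, d_right=0.1, gyro_rate=0.0,
                     dt=0.1, wheel_base=0.5)
print(pose)
```

Giving the gyro most of the weight reflects a common rationale: wheel slip corrupts the heading computed from encoder differences, while the gyro is unaffected by slip but drifts slowly over time.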

Another example is fusing Global Navigation Satellite System (GNSS) location information with IMU data to provide more robust estimates of a robot's position. This is especially useful in GNSS-denied locations, such as the dead spots in many industrial buildings, where IMU data can be fused with encoder odometry to keep providing reliable position information. The inertial sensor also contributes information about the robot's speed, which can be estimated by integrating its acceleration measurements. The result is position information with higher resolution and greater reliability.
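A minimal sketch of GNSS/IMU fusion, reduced to a one-dimensional linear Kalman filter. It assumes the state is position and velocity, IMU acceleration drives the prediction step, and GNSS position fixes correct the estimate when they are available. The class name `PosVelKF`, the noise variances, and the simulated outage are hypothetical; real systems typically run an extended or error-state Kalman filter in 3D.

```python
class PosVelKF:
    """1D Kalman filter with state (position, velocity).

    IMU acceleration drives the prediction; GNSS position fixes correct it.
    """

    def __init__(self, accel_var=0.1, gnss_var=4.0):
        self.p, self.v = 0.0, 0.0
        self.P = [[10.0, 0.0], [0.0, 10.0]]  # initial covariance (hypothetical)
        self.accel_var = accel_var  # IMU acceleration noise variance, (m/s^2)^2
        self.gnss_var = gnss_var    # GNSS position noise variance, m^2

    def predict(self, accel, dt):
        # x = F x + B a, with F = [[1, dt], [0, 1]] and B = [dt^2 / 2, dt]
        self.p += self.v * dt + 0.5 * accel * dt * dt
        self.v += accel * dt
        (a, b), (c, d) = self.P
        # P = F P F^T + Q, with Q = accel_var * B B^T
        g0, g1, q = 0.5 * dt * dt, dt, self.accel_var
        self.P = [[a + dt * (b + c) + dt * dt * d + q * g0 * g0, b + dt * d + q * g0 * g1],
                  [c + dt * d + q * g1 * g0, d + q * g1 * g1]]

    def update(self, gnss_pos):
        # Measurement model H = [1, 0]: GNSS observes position only.
        (a, b), (c, d) = self.P
        s = a + self.gnss_var          # innovation variance
        k0, k1 = a / s, c / s          # Kalman gain
        y = gnss_pos - self.p          # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P = (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]


kf = PosVelKF()
dt, accel = 0.1, 0.2  # hypothetical constant acceleration (m/s^2)
true_p = true_v = 0.0
var_before_outage = None
for step in range(100):
    # Simulated ground truth.
    true_p += true_v * dt + 0.5 * accel * dt * dt
    true_v += accel * dt
    kf.predict(accel, dt)  # IMU-driven prediction every step
    if step < 50 and step % 10 == 0:
        kf.update(true_p)  # noise-free 1 Hz GNSS fix, illustration only
    if step == 49:
        var_before_outage = kf.P[0][0]  # GNSS is lost after this step
print(kf.p, kf.P[0][0], var_before_outage)
```

During the simulated outage only the prediction step runs, so the position variance `P[0][0]` keeps growing; that growth is why the text pairs the IMU with encoder odometry in GNSS dead spots. An odometry velocity measurement could be added as a second update step, which this sketch omits.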

Industrial aerial robots

Aerial robots are another type of platform that uses GNSS for location and navigation. Increasingly, however, industrial aerial robots are being used in GNSS-denied environments such as warehouses, infrastructure sites, oil and gas facilities, and other areas where obstructions block GNSS signals. In the 3D environment where aerial robots and drones operate, conventional sensor fusion increases computational complexity and energy consumption, neither of which is desirable. In addition, the extra sensors add weight and can reduce the platform's payload capacity.

A variety of alternative sensor technologies are being applied to overcome these limitations, including motion capture camera systems, vision-based systems, LIDAR, radio beacons, and map-based approaches. Each option has strengths and weaknesses.

Motion capture systems consist of a network of cameras that allow accurate tracking of the drone. While they are accurate, they require a clear line of sight between the drone and several cameras to guarantee good performance. They are not suited for cluttered or dynamic environments, and they may not be cost-effective when scaled to larger environments.

Vision-based approaches can be cost-effective and lightweight. Sensor fusion can combine monocular cameras with IMUs to implement simultaneous localization and mapping (SLAM) algorithms. However, this approach struggles when drones move at high speed or operate in large, empty spaces. In either case, vision-based sensor fusion does not provide a robust solution and can suffer from poor long-term reliability. It can also be highly susceptible to changing lighting conditions.

Numerous fusion algorithms are available for integrating LIDAR with IMUs and with map-based approaches. Because it combines several sensor modalities, this approach appears promising. However, LIDAR systems can be heavy and expensive. Further limitations stem from the high speeds and strong vibrations inherent to aerial robots, which cause drift and poor repeatability.

Maps of the environment can be loaded into the drone and used for localization. This is particularly useful in industrial environments that do not change in the short term. The map can be built offline, and the approach usually delivers good reliability with modest computational requirements. Adaptive Monte Carlo localization (AMCL) is a common algorithm for map-based navigation. This probabilistic algorithm uses a particle filter to estimate the drone's position on the map, and it can be fused with additional sensor inputs, such as IMUs and audio sensors, to improve performance. AMCL adapts the number of particles it uses to make efficient use of computing resources. An open-source implementation of AMCL is available in the Robot Operating System (ROS). However, it is designed for mobile robots in a 2D environment and must be adapted for aerial drones.
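A minimal sketch of the particle-filter idea behind AMCL, reduced to one dimension so it fits in a few lines. It assumes a hypothetical map containing a single wall at x = 20 m, a range sensor with Gaussian noise, and a motion estimate (for example, from odometry or an IMU) at each step. It keeps a fixed number of particles; the adaptive part of AMCL, which the ROS `amcl` package implements with KLD-sampling to vary the particle count, is omitted. Names such as `mcl_step` are illustrative.

```python
import math
import random

WALL_X = 20.0  # hypothetical map: a single wall at x = 20 m


def expected_range(x):
    """Range the robot should measure to the wall from position x (map lookup)."""
    return WALL_X - x


def likelihood(error, sigma):
    """Unnormalized Gaussian likelihood of a measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


def mcl_step(particles, motion, measured_range,
             motion_sigma=0.05, range_sigma=0.2, rng=random):
    """One Monte Carlo localization step with a fixed particle count."""
    # 1. Predict: shift every particle by the motion estimate plus noise.
    moved = [p + motion + rng.gauss(0.0, motion_sigma) for p in particles]
    # 2. Weight: compare the measured range with what the map predicts.
    weights = [likelihood(measured_range - expected_range(p), range_sigma)
               for p in moved]
    if sum(weights) == 0.0:
        return moved  # no particle explains the reading; skip resampling
    # 3. Resample in proportion to the weights.
    return rng.choices(moved, weights=weights, k=len(moved))


rng = random.Random(0)
particles = [rng.uniform(0.0, WALL_X) for _ in range(500)]  # unknown start
true_x = 3.0
for _ in range(10):
    true_x += 0.5                                      # robot drives 0.5 m
    z = expected_range(true_x) + rng.gauss(0.0, 0.2)   # noisy range reading
    particles = mcl_step(particles, 0.5, z, rng=rng)
estimate = sum(particles) / len(particles)
print(round(estimate, 2), true_x)
```

A single wall makes this a unimodal problem. With a richer map, for example one containing several similar-looking features, the particle set can hold several position hypotheses at once, which is the main advantage of a particle filter over a filter that tracks a single Gaussian.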

Localization systems based on radio beacons provide a low-cost way to measure point-to-point distances without requiring line of sight between the beacons. More recently, ultra-wideband (UWB) wireless technology has been developed to provide accurate tracking and localization of drones. In GNSS-denied environments, UWB can deliver robust localization data using several fixed transponders at predetermined positions in the environment, along with a sensor on the drone. However, these sensors provide only part of the solution, since they lack orientation information. As shown in the following section, ultrasonic technology can be used in place of UWB to provide cost-effective, reconfigurable beacon systems in certain 3D environments.
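A minimal sketch of how range measurements to fixed beacons can be turned into a position, assuming at least four anchors at known 3D coordinates that do not all lie in one plane, and ideal, noise-free ranges. Subtracting the first anchor's range equation from the others yields a linear system, which is solved here by least squares through the normal equations. The function names and anchor layout are hypothetical; practical UWB or ultrasonic systems add noise handling and often use an iterative solver.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def trilaterate(anchors, ranges):
    """Estimate a 3D position from ranges to >= 4 fixed anchors (linear least squares)."""
    (x0, y0, z0), r0 = anchors[0], ranges[0]
    rows, rhs = [], []
    # |p - a_i|^2 - |p - a_0|^2 = r_i^2 - r_0^2 is linear in p.
    for (x, y, z), r in zip(anchors[1:], ranges[1:]):
        rows.append([2 * (x - x0), 2 * (y - y0), 2 * (z - z0)])
        rhs.append((x * x + y * y + z * z) - (x0 * x0 + y0 * y0 + z0 * z0)
                   - r * r + r0 * r0)
    # Normal equations: (A^T A) p = A^T b
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(3)] for i in range(3)]
    atb = [sum(row[i] * b for row, b in zip(rows, rhs)) for i in range(3)]
    d = det3(ata)
    if abs(d) < 1e-12:
        raise ValueError("degenerate anchor geometry (e.g. all anchors coplanar)")
    # Cramer's rule: replace column k with A^T b.
    solution = []
    for k in range(3):
        mk = [[atb[i] if j == k else ata[i][j] for j in range(3)] for i in range(3)]
        solution.append(det3(mk) / d)
    return tuple(solution)


anchors = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 0, 5)]  # hypothetical layout (m)
true_pos = (3.0, 4.0, 2.0)
ranges = [math.dist(a, true_pos) for a in anchors]
estimate = trilaterate(anchors, ranges)
print(estimate)  # approximately (3.0, 4.0, 2.0)
```

The output is a position only, with no orientation, which matches the limitation described above: heading has to come from another source, such as an IMU.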

Ship cleaning robot

Sensor fusion makes it possible to build robots that perform tasks considered dangerous or harmful for human operators. For example, ship hull maintenance requires inspection, paint stripping, and repainting while the ship is in drydock. Paint stripping, in particular, is hazardous to people. The first robots developed for paint stripping in shipyards were not autonomous; they required constant human monitoring and control to maintain consistent results.

Hornbill, a recently developed self-evaluating hull cleaning and paint stripping robot, can navigate autonomously. It includes an evaluation algorithm based on a deep convolutional neural network that monitors and controls the cleaning results. In operation, Hornbill uses a 3000-bar hydro-blasting nozzle to clean the hull and strip paint within a predefined workspace. Basic navigation, location tracking, and other functions run under the control of ROS.

Accurate position information is critical to Hornbill's operation. However, Hornbill cannot rely on the conventional approach of determining its location through feature tracking and sensor fusion with LIDAR-based SLAM or visual SLAM. Because Hornbill blasts with an open water jet, neither LIDAR nor visual SLAM can provide accurate information.

Instead, Hornbill uses a wirelessly networked ultrasonic beacon system to obtain accurate position information. The mobile beacon on Hornbill contains an IMU, and fusing the external beacon signals with the IMU data yields a highly accurate position estimate. Hornbill's relative 3D position within the predefined boundary of the static beacons is determined by sensing the reflected ultrasonic waves between the stationary beacons and the mobile beacon on the robot. These coordinates are converted into ROS 3D pose messages carrying the position, which are used to control Hornbill's movements.
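The text does not say exactly how Hornbill's beacons convert ultrasonic measurements into distances, so the following is only a generic sketch of time-of-flight ranging in air, assuming a measured flight time and a known air temperature. The function names are hypothetical, and the speed of sound uses the common linear approximation for dry air.

```python
def speed_of_sound(temp_c):
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius,
    using the linear approximation c = 331.3 + 0.606 * T."""
    return 331.3 + 0.606 * temp_c


def tof_to_range(tof_s, temp_c=20.0, round_trip=False):
    """Convert an ultrasonic time of flight (s) to a distance (m).

    Set round_trip=True when the measured time covers the path out and back
    (an echo), so the one-way distance is half the total path length.
    """
    path = speed_of_sound(temp_c) * tof_s
    return path / 2.0 if round_trip else path


# Hypothetical reading: a 10 ms one-way flight time at 20 deg C.
print(tof_to_range(0.01))  # about 3.43 m
```

Because the speed of sound depends on air temperature, a 10 °C error in the assumed temperature shifts a 10 ms reading by about 6 cm (0.606 m/s per °C × 10 °C × 0.01 s), which is one reason temperature compensation and fusion with other sensors matter for ultrasonic ranging.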

Summary

Sensor fusion is an important tool that enables autonomous robots to perform complex tasks consistently. It often combines sensors on the robot with sensors in the environment to provide highly reliable location information. External sources range from GNSS signals to cameras and various active beacon technologies. On-board sensing varies widely depending on the environment and the demands on the robot, and can include IMUs, cameras, and LIDAR, as well as map-based approaches. Continued development and refinement of sensor fusion will be important for building cost-effective autonomous mobile and fixed robot platforms.

References

Hornbill: A Self-Evaluating Hydro-Blasting Reconfigurable Robot for Ship Hull Maintenance, IEEE

Multi-Sensor Fusion for Aerial Robots in Industrial GNSS-Denied Environments, MDPI

Multi-sensor Fusion for Robust Device Autonomy, Edge AI and Vision Alliance

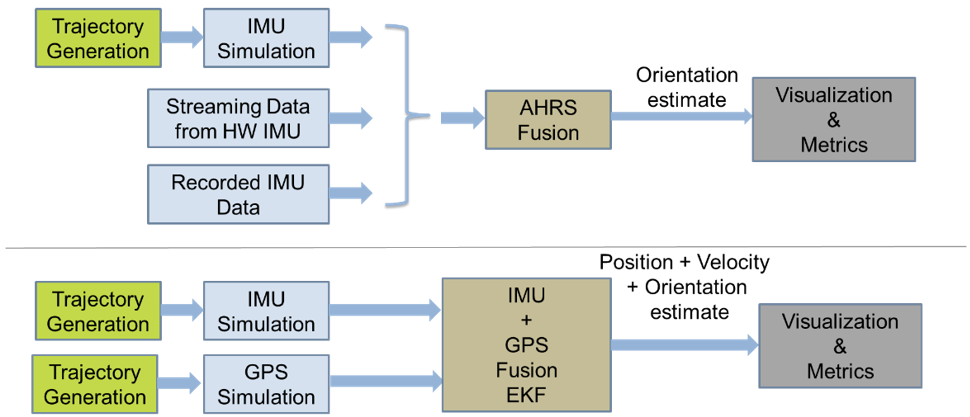

Sensor Fusion using the Robot Operating System, Automatic Addison

State Estimation and Localization Based on Sensor Fusion for Autonomous Robots in Indoor Environment, MDPI